Justin Kerr

I'm a 4th-year PhD student at Berkeley's AI Research Lab, co-advised by Angjoo Kanazawa and Ken Goldberg. My research focuses on advancing robot perception for real-world manipulation through 3D multi-modal understanding, 4D reconstruction, tactile sensing, and active vision. I also maintain Nerfstudio, a large open-source repo for 3D neural reconstruction, and my work is supported by the NSF GRFP.

Before Berkeley, I did my undergrad at CMU where I worked with Howie Choset on multi-robot path planning. I also spent time at Berkshire Grey on warehouse bin-packing automation and NASA JPL, where I was lucky to be involved in early work on a moon rover tech demo.

Papers

GaussGym: An open-source real-to-sim framework for learning locomotion from pixels

Alejandro Escontrela, Justin Kerr, Arthur Allshire, Jonas Frey, Rocky Duan, Carmelo Sferrazza, Pieter Abbeel

arXiv 2024

arXiv / Website

A real-to-sim framework for training robot locomotion policies from RGB pixels in IsaacGym using Gaussian splatted scenes.

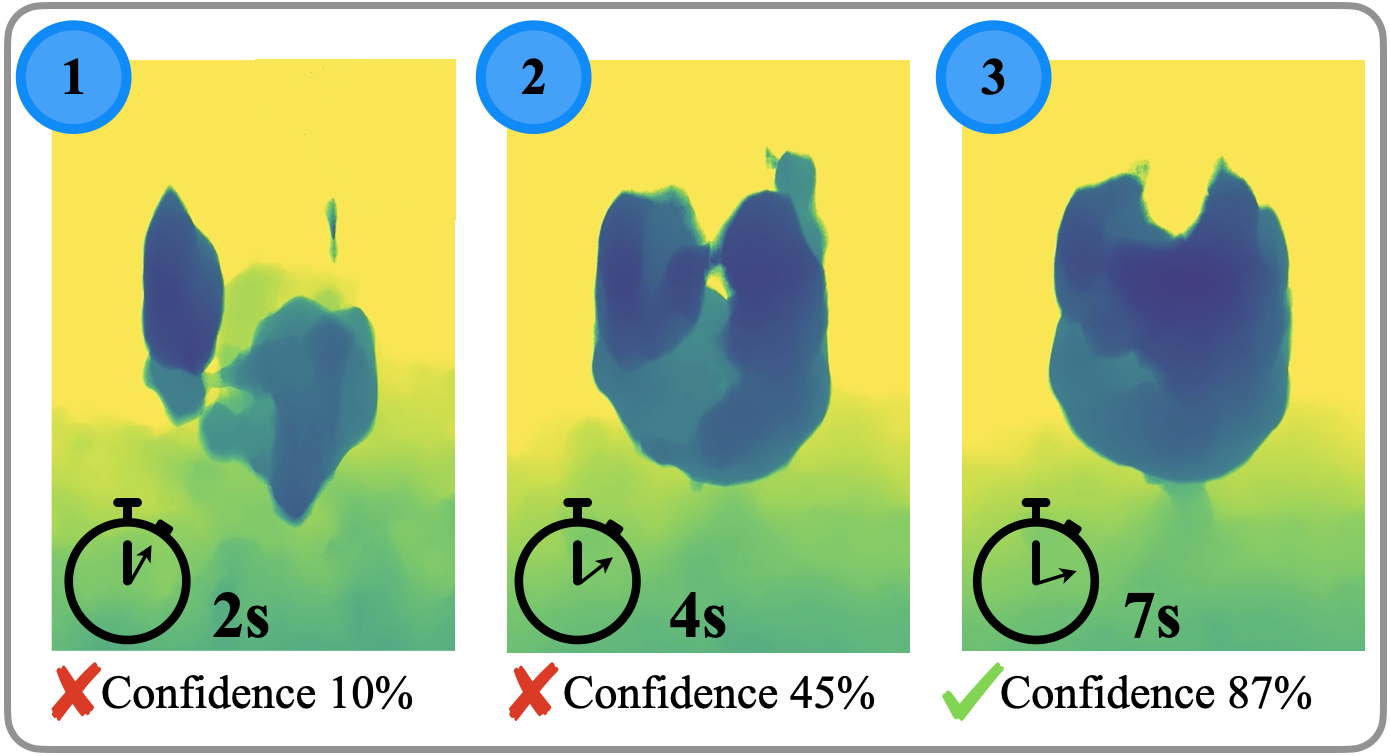

Eye, Robot: Learning to Look to Act with a BC-RL Perception-Action Loop

Justin Kerr, Kush Hari, Ethan Weber, Chung Min Kim, Brent Yi, Tyler Bonnen, Ken Goldberg, Angjoo Kanazawa

CoRL 2025

arXiv / Website

We train a robot eyeball policy to look around so that a BC arm agent can perform its task. Eye gaze emerges from RL by co-training with the BC agent and rewarding the eye for correct arm predictions.

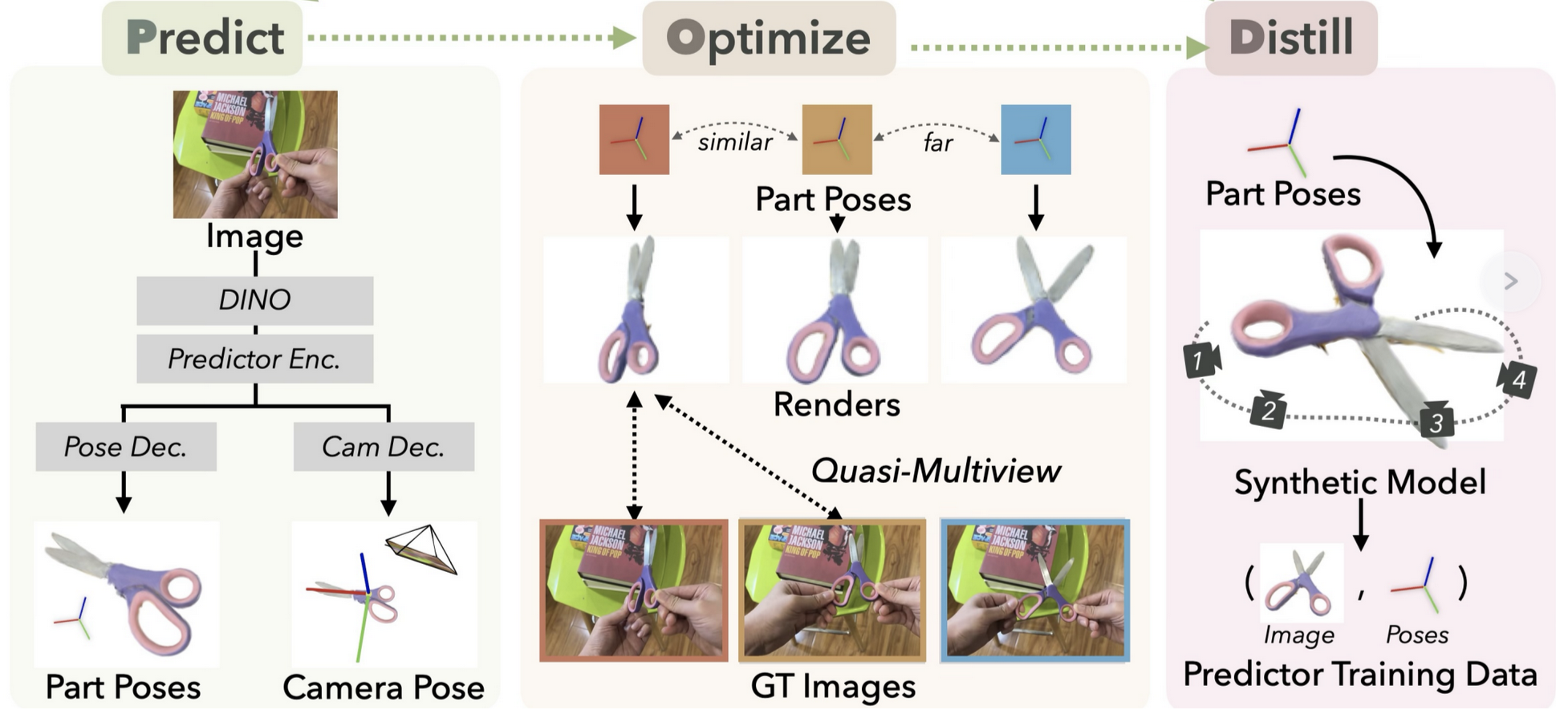

Predict-Optimize-Distill: A Self-Improving Cycle for 4D Object Understanding

Mingxuan Wu*, Huang Huang*, Justin Kerr, Chung Min Kim, Anthony Zhang, Brent Yi, Angjoo Kanazawa

ICCV 2025

arXiv Website

A self-improving framework that builds intuition for predicting 3D object configurations through iterative observation, prediction, and optimization cycles.

Persistent Object Gaussian Splat (POGS) for Tracking Human and Robot Manipulation of Irregularly Shaped Objects

Justin Yu*, Kush Hari*, Karim El-Refai*, Arnav Dalal, Justin Kerr, Chung Min Kim, Richard Cheng, Muhammad Zubair Irshad, Ken Goldberg

ICRA 2025

arXiv

Tracking and manipulating irregularly-shaped, previously unseen objects using a single stereo camera with Gaussian splatting for object pose estimation and language-driven manipulation.

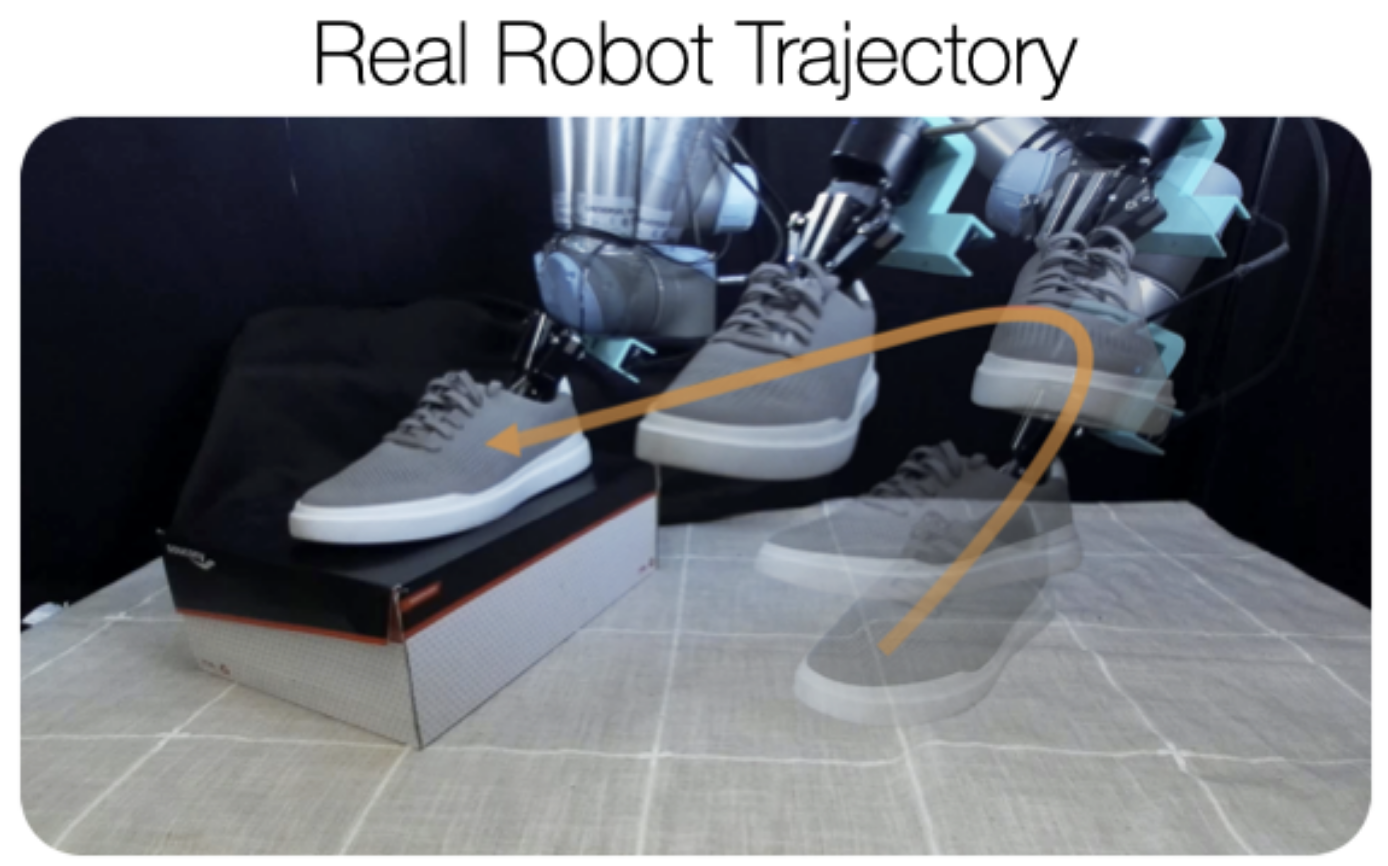

Robot See Robot Do: Imitating Articulated Object Manipulation with Monocular 4D Reconstruction

Justin Kerr*, Chung Min Kim*, Mingxuan Wu, Brent Yi, Qianqian Wang, Ken Goldberg, Angjoo Kanazawa

CoRL Oral 2024

arXiv Website

Object-centric visual imitation from a single video by 4D-reconstructing articulated object motion and transferring it to a bimanual robot.

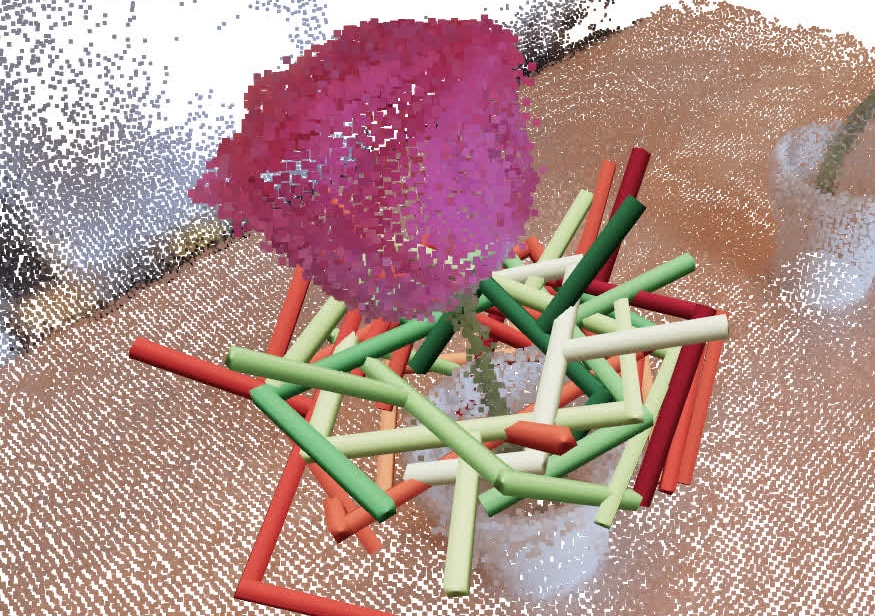

GARField: Group Anything with Radiance Fields

Chung Min Kim*, Mingxuan Wu*, Justin Kerr*, Ken Goldberg, Matthew Tancik, Angjoo Kanazawa

CVPR 2024

arXiv Website

Hierarchical grouping in 3D by training a scale-conditioned affinity field from multi-level masks.

LERF-TOGO: Language Embedded Radiance Fields for Zero-Shot Task-Oriented Grasping

Adam Rashid*, Satvik Sharma*, Chung Min Kim, Justin Kerr, Lawrence Yunliang Chen, Angjoo Kanazawa, Ken Goldberg

CoRL Oral, Best Paper Finalist 2023

arXiv Website

LERF's multi-scale semantics enable zero-shot language-conditioned part grasping for a wide variety of objects.

Nerfstudio: A Modular Framework for Neural Radiance Field Development

Matthew Tancik, Ethan Weber, Evonne Ng, Ruilong Li, Brent Yi, Justin Kerr, Terrance Wang, Alexander Kristoffersen, Jake Austin, Kamyar Salahi, Abhik Ahuja, David McAllister, Angjoo Kanazawa

SIGGRAPH 2023

arXiv Website GitHub

A modular PyTorch framework for Neural Radiance Fields research with plug-and-play components and real-time visualization tools.

LERF: Language Embedded Radiance Fields

Justin Kerr*, Chung Min Kim*, Ken Goldberg, Angjoo Kanazawa, Matthew Tancik

ICCV Oral 2023

arXiv Website

Grounding CLIP vectors volumetrically inside a NeRF allows flexible natural language queries in 3D.

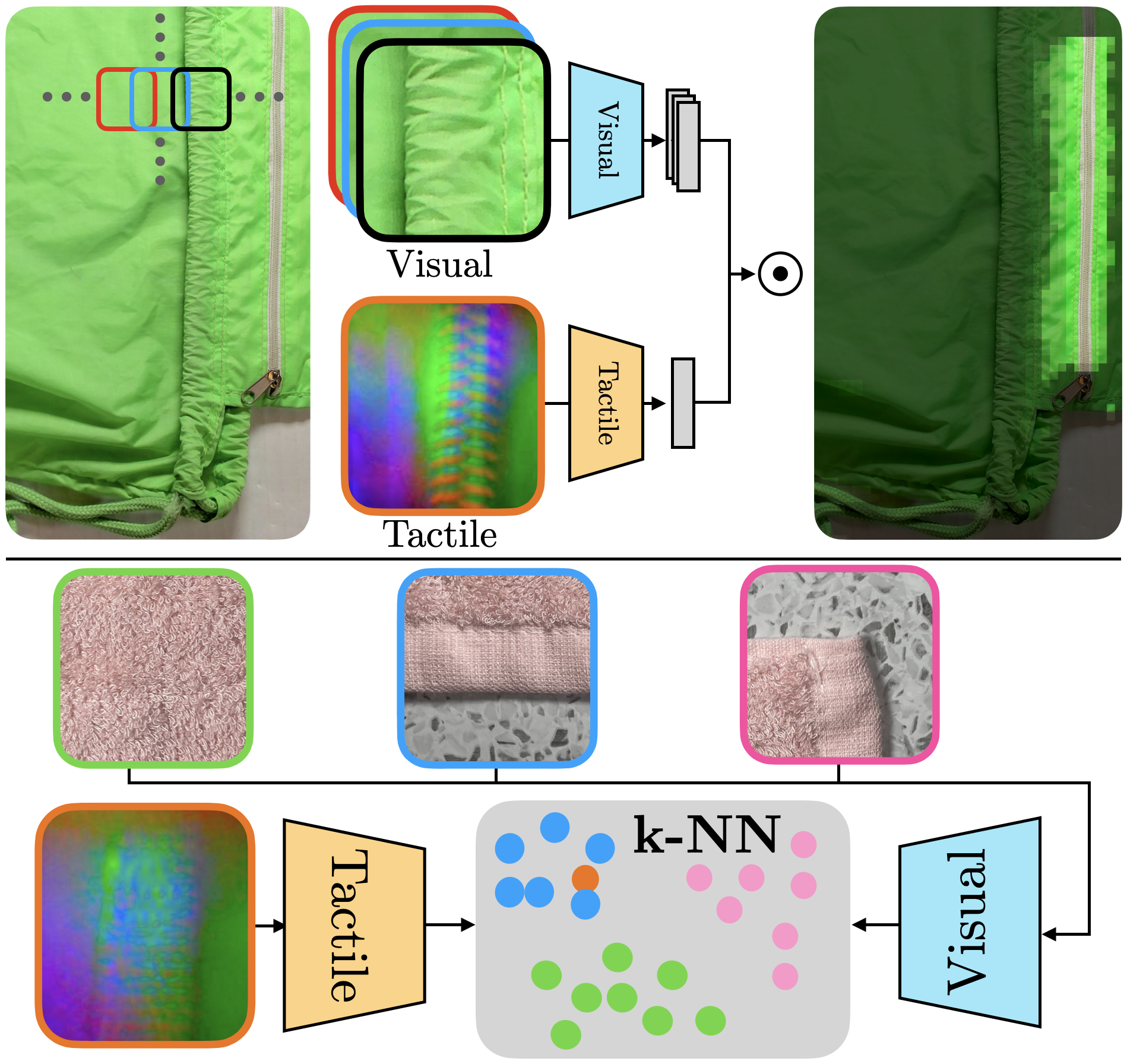

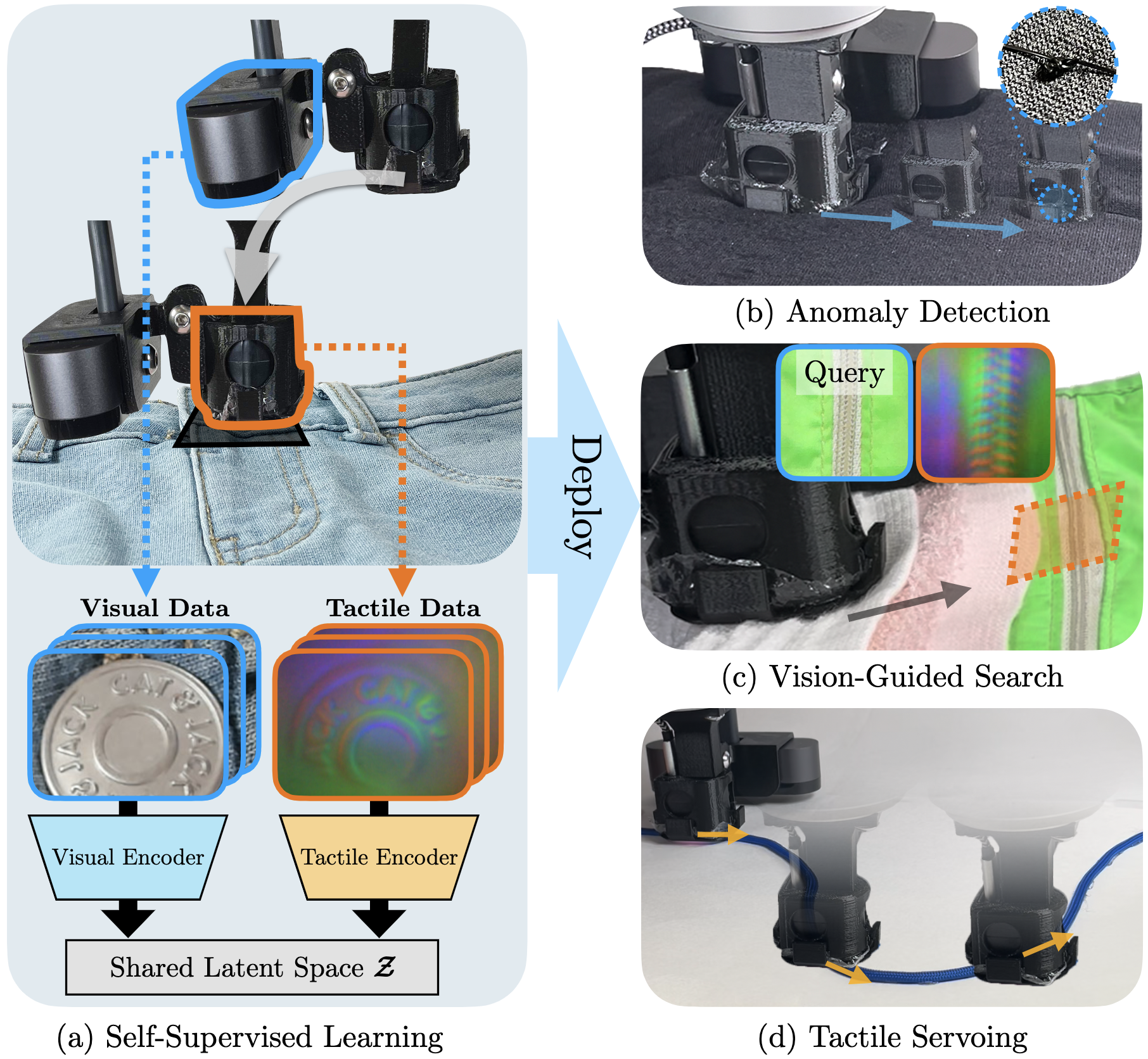

Self-Supervised Visuo-Tactile Pretraining to Locate and Follow Garment Features

Justin Kerr*, Huang Huang*, Albert Wilcox, Ryan Hoque, Jeffrey Ichnowski, Roberto Calandra, Ken Goldberg

RSS 2023

arXiv Website

We collect spatially paired vision and tactile inputs with a custom rig to train cross-modal representations. We then show these representations can be used for multiple active and passive perception tasks without fine-tuning.

Evo-NeRF: Evolving NeRF for Sequential Robot Grasping

Justin Kerr, Letian Fu, Huang Huang, Yahav Avigal, Matthew Tancik, Jeffrey Ichnowski, Angjoo Kanazawa, Ken Goldberg

CoRL Oral 2022

Website OpenReview

NeRF functions as a real-time, updateable scene reconstruction for rapidly grasping table-top transparent objects. Geometry regularization speeds up reconstruction and improves scene geometry, and a NeRF-adapted grasping network learns to ignore floaters.

All You Need is LUV: Unsupervised Collection of Labeled Images using Invisible UV Fluorescent Indicators

Brijen Thananjeyan*, Justin Kerr*, Huang Huang, Joseph E. Gonzalez, Ken Goldberg

IROS 2022

Website arXiv

Fluorescent paint enables inexpensive (<$300) and self-supervised data collection of dense image annotations without altering objects' appearance.

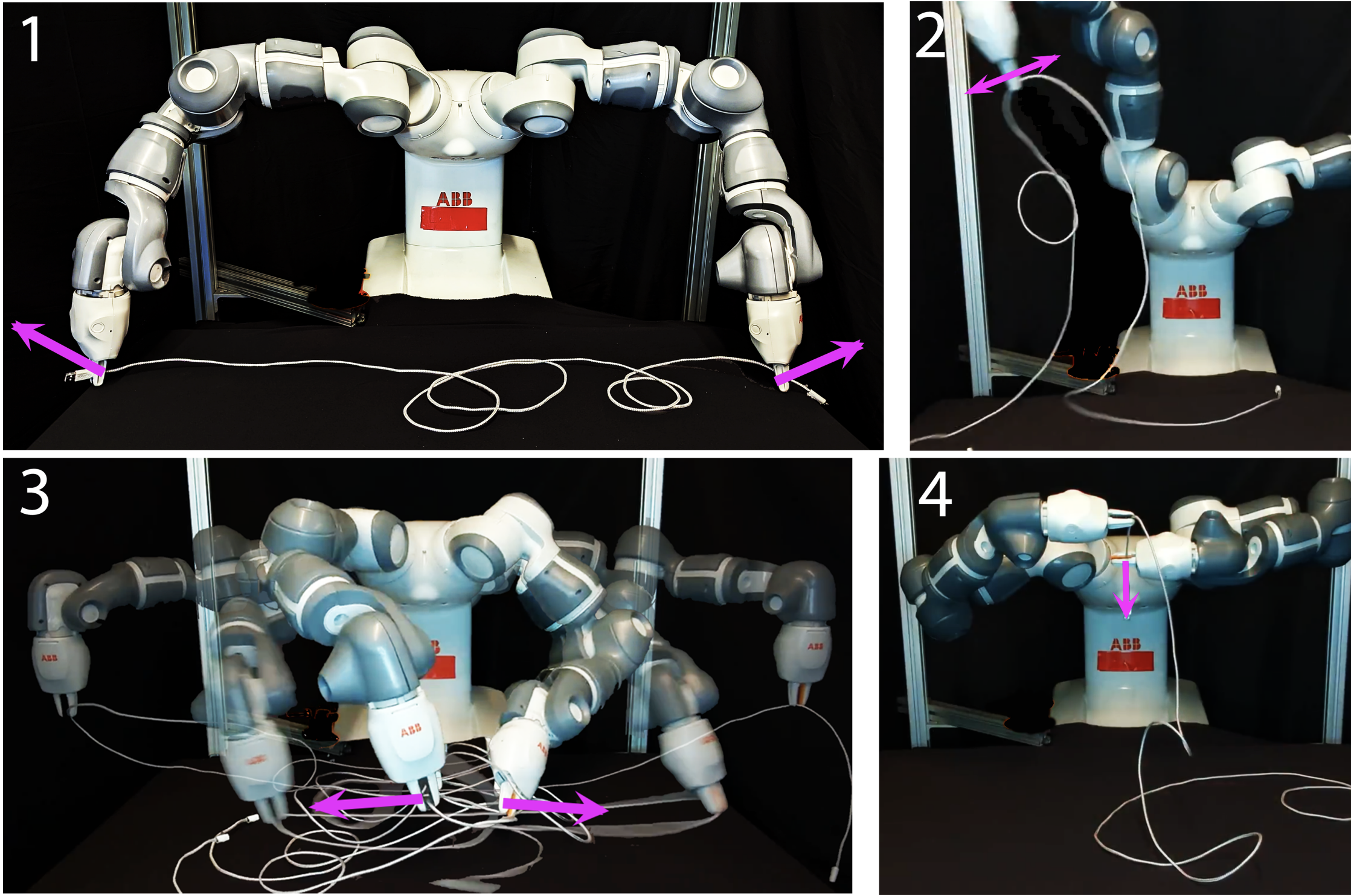

Autonomously Untangling Long Cables

Vainavi Viswanath*, Kaushik Shivakumar*, Justin Kerr*, Brijen Thananjeyan, Ellen Novoseller, Jeffrey Ichnowski, Alejandro Escontrela, Michael Laskey, Joseph E. Gonzalez, Ken Goldberg

RSS Best Systems Paper Award 2022

Website Paper

A sliding-pinching dual-mode gripper enables untangling charging cables, using manipulation primitives that simplify perception together with learned perception modules.

Dex-NeRF: Using a Neural Radiance Field to Grasp Transparent Objects

Jeffrey Ichnowski*, Yahav Avigal*, Justin Kerr, Ken Goldberg

CoRL 2021

Website arXiv

Using NeRF to reconstruct scenes with offline-calibrated camera poses can produce graspable geometry even on reflective and transparent objects.

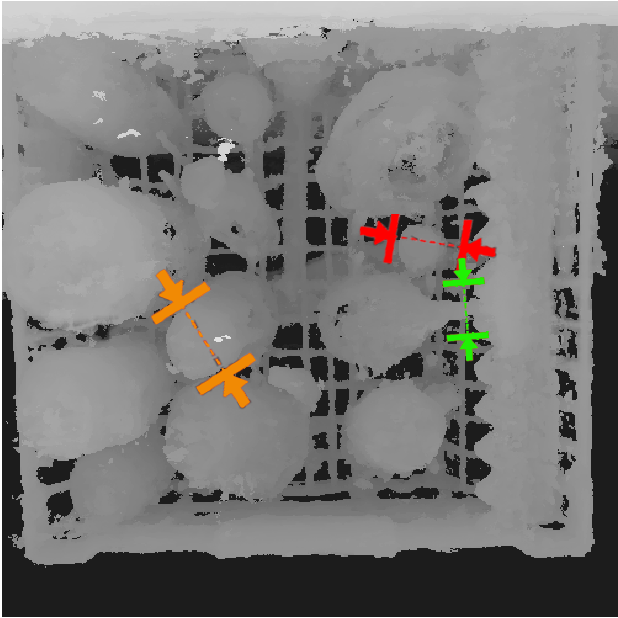

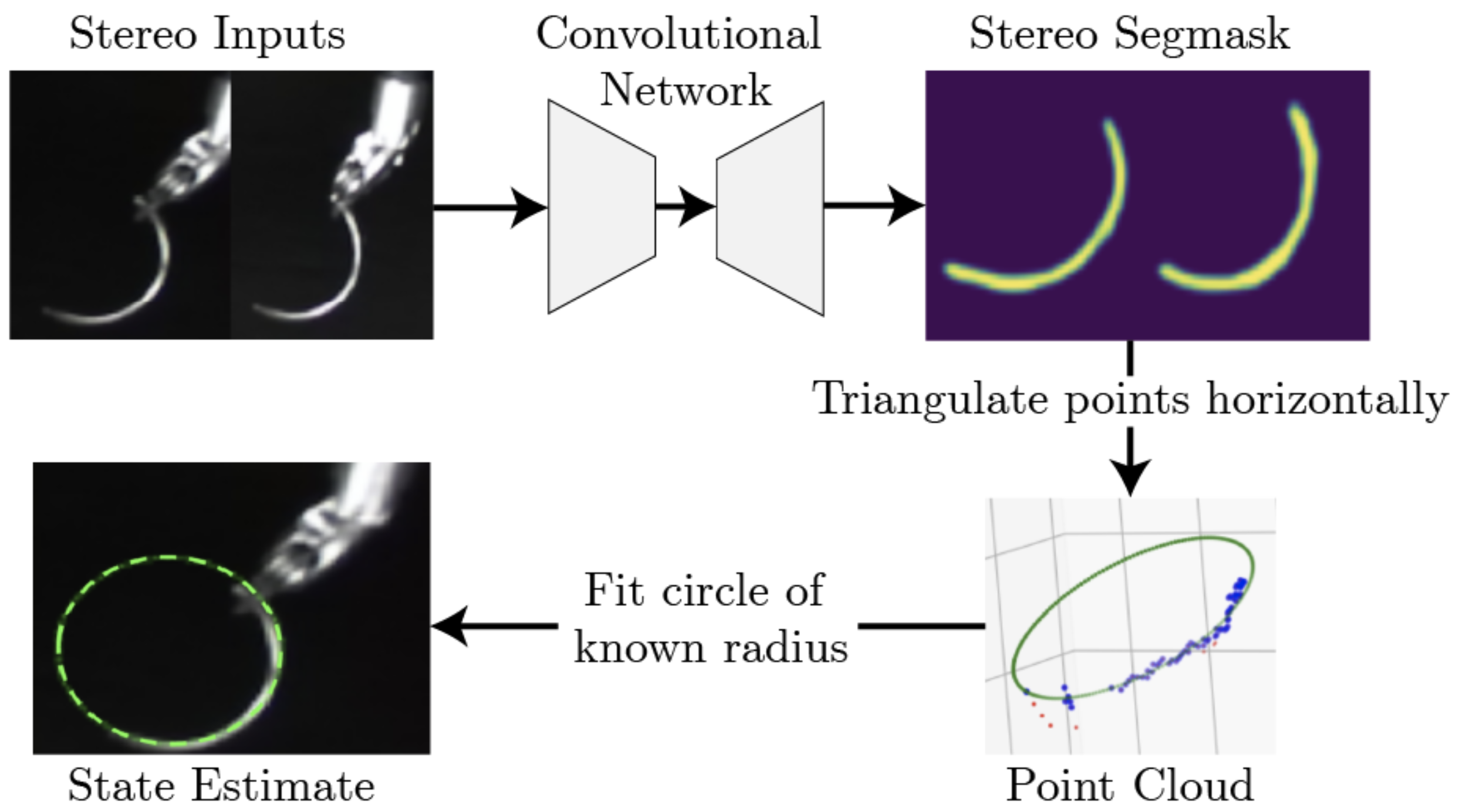

Learning to Localize, Grasp, and Hand Over Unmodified Surgical Needles

Albert Wilcox*, Justin Kerr*, Brijen Thananjeyan, Jeff Ichnowski, Minho Hwang, Samuel Paradis, Danyal Fer, Ken Goldberg

ICRA 2022

Website arXiv

Combining active perception with behavior cloning can reliably hand a surgical needle back and forth between grippers.

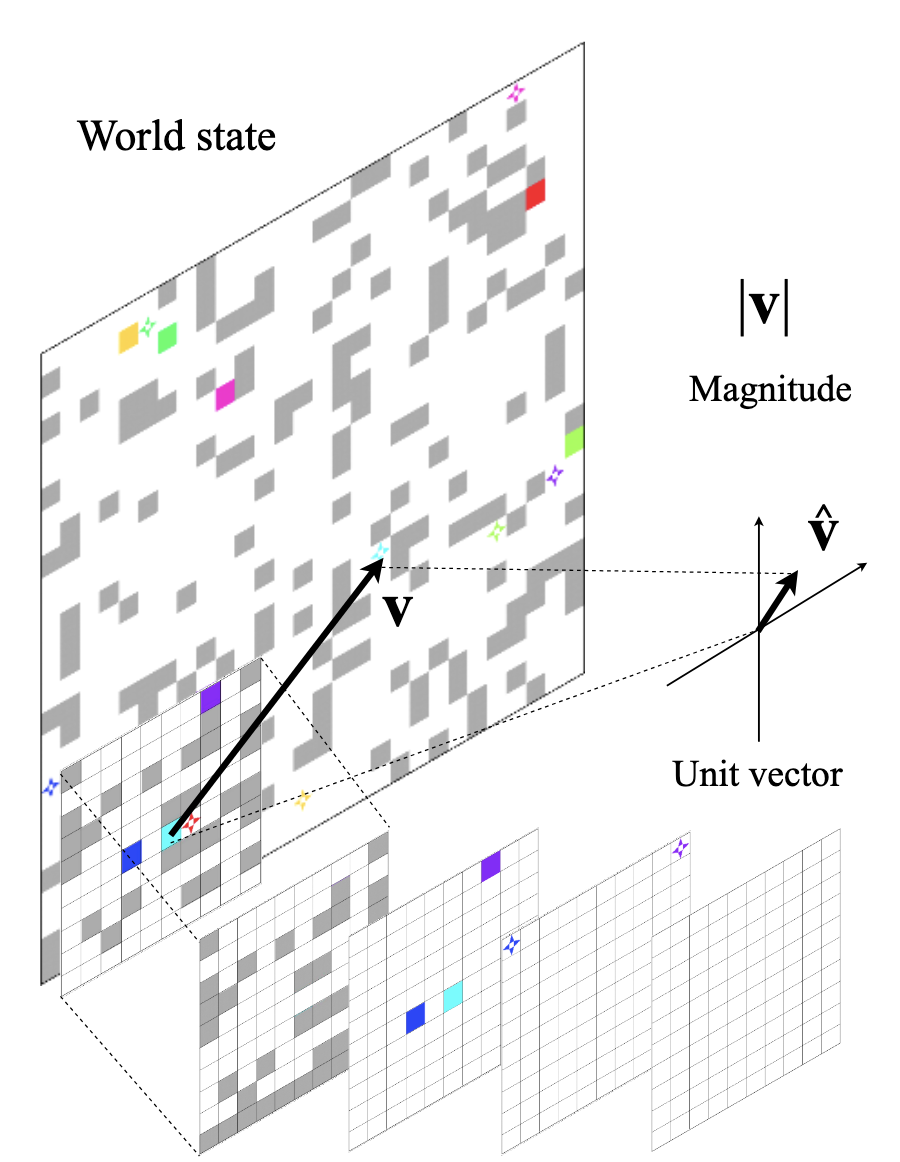

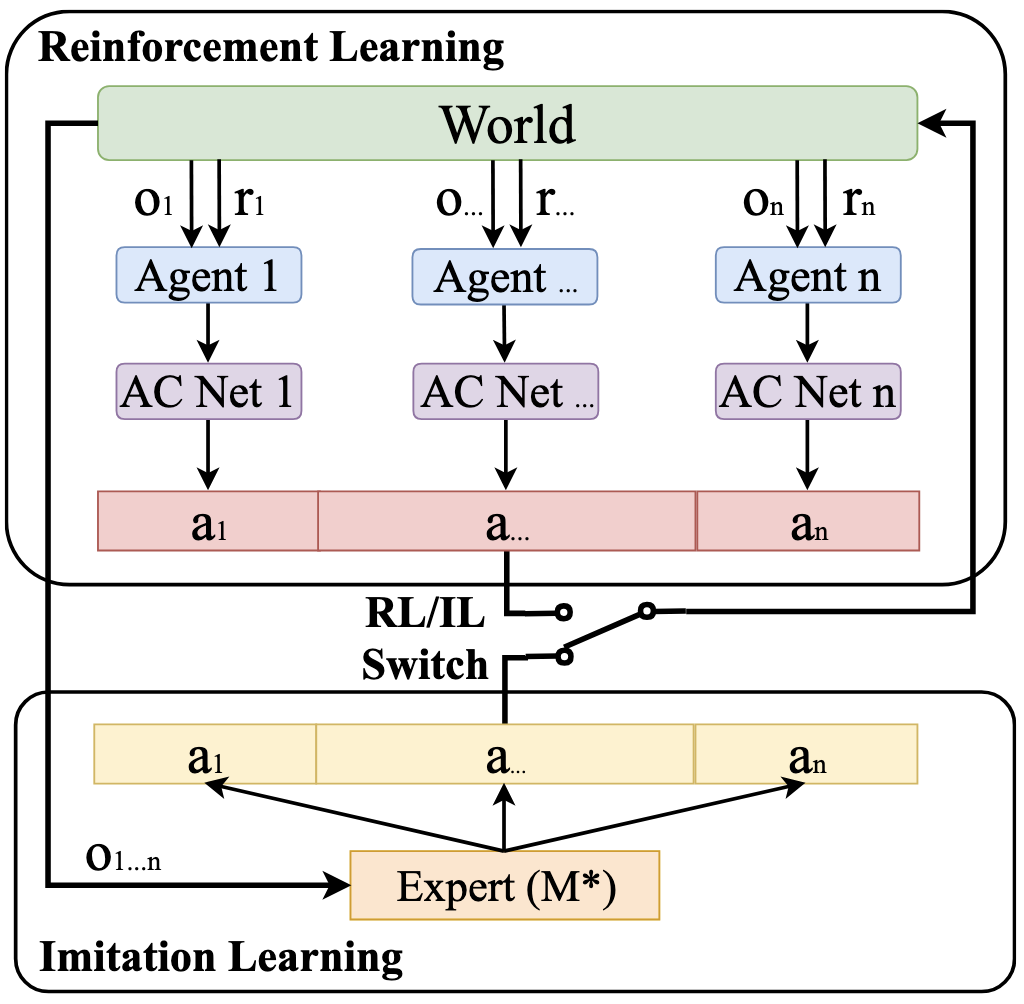

PRIMAL: Pathfinding via Reinforcement and Imitation Multi-Agent Learning

Guillaume Sartoretti, Justin Kerr, Yunfei Shi, Glenn Wagner, T. K. Satish Kumar, Sven Koenig, Howie Choset

IEEE Robotics and Automation Letters 2019

arXiv A novel framework for multi-agent pathfinding that combines reinforcement and imitation learning to teach fully decentralized policies, validated with up to 1024 agents.

Robot Systems Projects

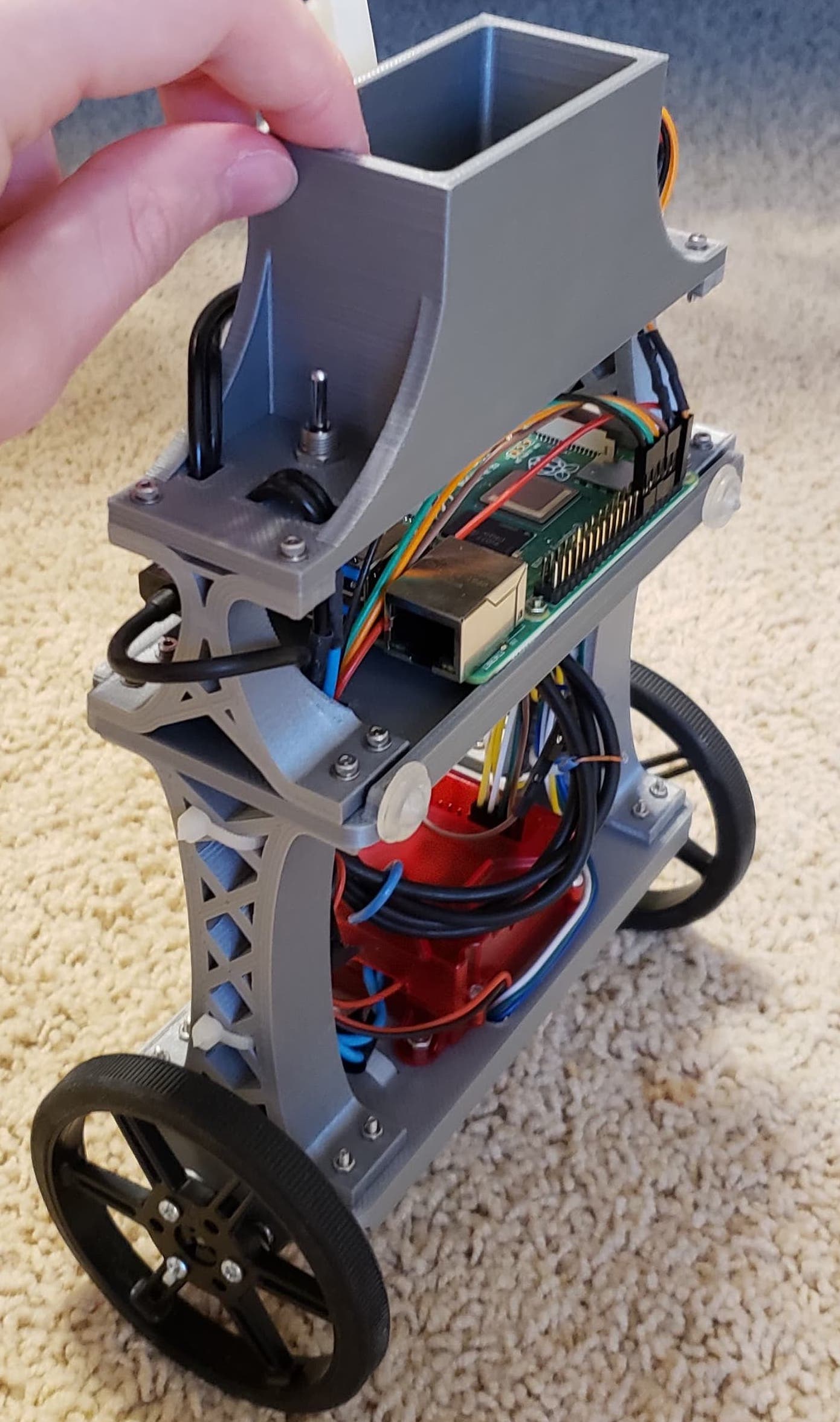

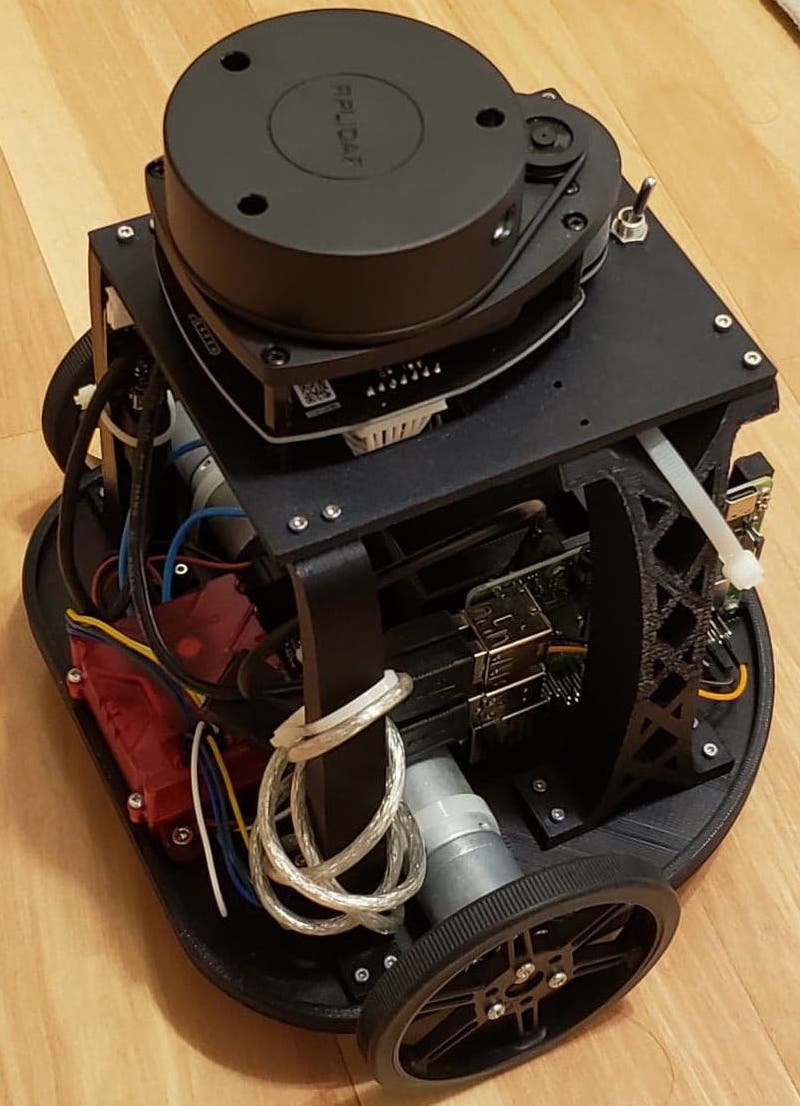

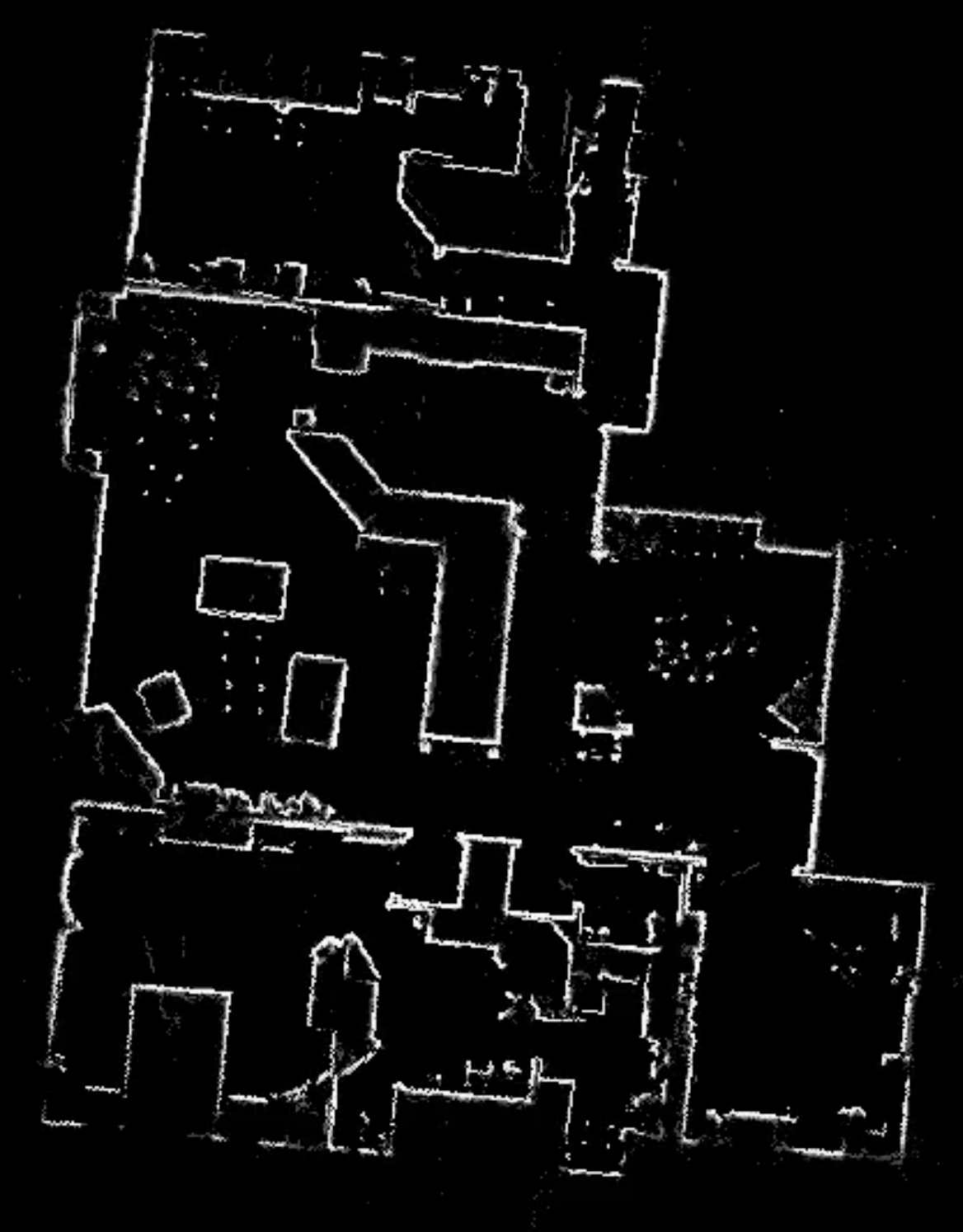

The result of lots of COVID boredom: I designed, built, and programmed these (mostly) from scratch.